Chromatography is one of the foundational analytical tools used to evaluate the composition and purity of chemical substances. By separating individual components based on their interactions with stationary and mobile phases, it enables laboratories to confirm the identity of an active compound and detect even minimal levels of impurities.

This article explains the core mechanisms behind chromatographic separation, reviews the principal analytical techniques used in pharmaceutical settings, and highlights why chromatography remains the most reliable approach for assessing purity and ensuring compound integrity.

What chromatography is

Chromatography is an analytical separation technique used to identify, quantify, and verify the purity of chemical compounds. At its core, chromatography exploits the fact that different molecules interact differently with two phases:

- a stationary phase: a solid, or a liquid film coated or bonded onto a solid support, that stays fixed inside the column;

- a mobile phase: a liquid or gas that carries the sample through the system.

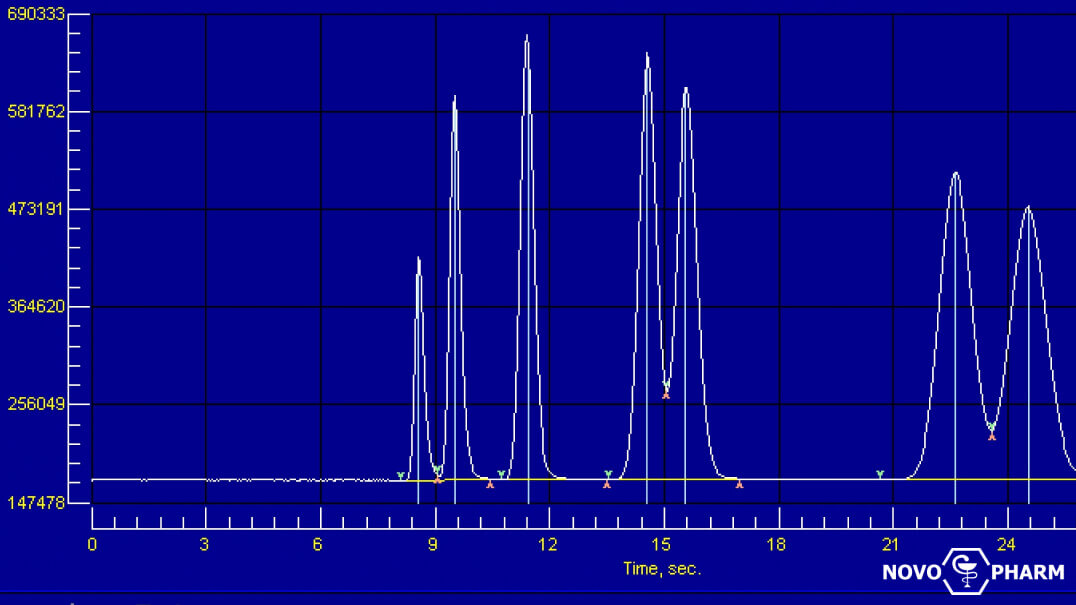

As the sample travels through the chromatographic column, each component moves at a different speed based on its chemical properties. This difference in movement creates distinct peaks on a chromatogram, allowing analysts to determine both the identity and purity of the substance being tested.

Chromatography is considered a gold standard in pharmaceutical quality control because it can detect impurities at extremely low concentrations, confirm the presence of the desired active compound, and provide reproducible, high-resolution results.

How chromatographic separation works

Chromatographic separation works by exploiting how differently molecules interact with two phases: a stationary phase inside the column and a mobile phase that carries the sample through it. Compounds that interact strongly with the stationary phase move slowly, while those with weaker interactions travel faster and elute earlier.
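
One common way to put a number on this behaviour is the retention factor, k = (t_R - t_0) / t_0, which compares a compound's retention time t_R with the time t_0 that an unretained compound needs to pass through the column. The sketch below is illustrative only: the function names and the retention times are hypothetical values chosen for the example, not data from a real method.

```python
def retention_factor(t_r: float, t_0: float) -> float:
    """Retention factor k = (t_R - t_0) / t_0.

    t_r: retention time of the compound (minutes)
    t_0: dead time, i.e. elution time of an unretained compound (minutes)
    """
    if t_0 <= 0:
        raise ValueError("t_0 must be positive")
    if t_r < t_0:
        raise ValueError("a compound cannot elute before the dead time")
    return (t_r - t_0) / t_0


def selectivity(k_early: float, k_late: float) -> float:
    """Selectivity (separation factor) between two peaks: ratio of their k values."""
    if k_early <= 0:
        raise ValueError("the earlier peak must be retained (k > 0)")
    return k_late / k_early


# Hypothetical run: dead time 1.0 min, two compounds at 2.5 and 6.0 min.
T_0 = 1.0
k_weak = retention_factor(2.5, T_0)    # weaker interaction, elutes earlier
k_strong = retention_factor(6.0, T_0)  # stronger interaction, elutes later

print(f"k (weakly retained)   = {k_weak:.2f}")
print(f"k (strongly retained) = {k_strong:.2f}")
print(f"selectivity           = {selectivity(k_weak, k_strong):.2f}")
```

A larger k means the compound spends more time associated with the stationary phase, which matches the qualitative picture above: stronger interaction, later elution.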

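These differences in movement give each component its own retention time, the time it takes to travel from injection to the detector. The detector records the eluting components as separate peaks on a chromatogram, where each well-resolved peak represents a specific component of the sample. The area under a peak is proportional to the amount of that component present (for a given detector response), so the chromatogram supports both identification and purity assessment.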
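
To make the link between a peak and the amount of material more concrete, the sketch below builds a synthetic chromatogram from two Gaussian peaks and integrates each one with the trapezoidal rule. Everything here is an assumption made for illustration: real peaks are rarely perfectly Gaussian, real data need baseline correction, and chromatography data systems use their own integration algorithms. The helper names are hypothetical.

```python
import math


def gaussian_peak(t: float, center: float, sigma: float, height: float) -> float:
    """Detector signal of an idealized Gaussian peak at time t."""
    return height * math.exp(-0.5 * ((t - center) / sigma) ** 2)


def peak_area(times, signal, start, end):
    """Trapezoidal area under the signal between start and end (flat zero baseline assumed)."""
    area = 0.0
    for i in range(1, len(times)):
        if times[i - 1] >= start and times[i] <= end:
            width = times[i] - times[i - 1]
            area += 0.5 * (signal[i - 1] + signal[i]) * width
    return area


# Synthetic 10-minute run sampled every 0.01 min.
times = [i * 0.01 for i in range(1001)]

# A large main peak at 4.0 min and a small impurity peak at 7.0 min.
signal = [
    gaussian_peak(t, center=4.0, sigma=0.10, height=100.0)
    + gaussian_peak(t, center=7.0, sigma=0.10, height=1.0)
    for t in times
]

main_area = peak_area(times, signal, start=3.0, end=5.0)
minor_area = peak_area(times, signal, start=6.0, end=8.0)

print(f"main peak area:  {main_area:.3f}")
print(f"minor peak area: {minor_area:.3f}")
print(f"area ratio:      {main_area / minor_area:.1f}")
```

Because both peaks have the same width in this example, the area ratio simply follows the height ratio. With real data, peaks of different widths can have the same height but very different areas, which is why area rather than height is normally used for quantification.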

How purity analysis is performed

A detector (UV, MS, or other) records the elution of each component over time, producing a chromatogram. The analyst evaluates:

- retention time: compared against a reference standard run under the same conditions, it supports the identity of the main compound;

- peak shape and symmetry: indicate proper separation and system performance;

- impurity peaks: reveal unintended byproducts, degradation products, or contaminants;

- peak area: used to calculate purity, typically expressed as a percentage of the total peak area.

A high-purity material will show one dominant peak corresponding to the target compound, with minimal or no additional peaks. Deviations in peak pattern, unexpected retention times, or elevated impurity signals indicate compromised purity or stability.
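
The percentage calculation mentioned above is often done by area normalization: each peak's area is divided by the sum of all integrated peak areas. The sketch below shows that arithmetic under a strong simplifying assumption, namely that every component gives the same detector response per unit amount. The peak names and areas are invented for the example, and no reporting threshold or response-factor correction is applied.

```python
from typing import Dict, Mapping


def area_percent(peak_areas: Mapping[str, float]) -> Dict[str, float]:
    """Return each peak's share of the total integrated area, in percent."""
    if any(area < 0 for area in peak_areas.values()):
        raise ValueError("peak areas must be non-negative")
    total = sum(peak_areas.values())
    if total <= 0:
        raise ValueError("total peak area must be positive")
    return {name: 100.0 * area / total for name, area in peak_areas.items()}


# Hypothetical integration results (arbitrary area units).
peaks = {
    "main compound": 9925.0,
    "impurity A": 45.0,
    "impurity B": 30.0,
}

result = area_percent(peaks)
for name, percent in result.items():
    print(f"{name:<15} {percent:6.2f} %")

total_impurities = sum(p for name, p in result.items() if name != "main compound")
print(f"{'total impurities':<15} {total_impurities:5.2f} %")
```

In this made-up example the main peak accounts for 99.25 % of the total area. Where impurities respond differently to the detector than the main compound, area percent can over- or under-state their true level, so quantification against reference standards is generally preferred when accuracy matters.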

Why chromatography is the gold standard for pharmaceutical purity testing

Chromatography is considered the gold standard in pharmaceutical analysis because it can separate, identify, and quantify individual components within a complex mixture with exceptional precision. Unlike simpler analytical methods, chromatography detects both the main active ingredient and even trace-level impurities that may affect safety, potency, or stability.

It provides high resolution and reproducible results, and validated chromatographic methods can meet pharmacopoeial and regulatory expectations such as those set out in the USP, the European Pharmacopoeia (EP), and ICH guidelines. For this reason, it is the primary method used for release testing, stability studies, API verification, and impurity profiling across the pharmaceutical industry.

Common analytical errors and how they are corrected

| Issue | Likely Cause | Corrective Action |
| --- | --- | --- |
| Poor peak shape (tailing/fronting) | Column wear, incorrect mobile-phase pH, contaminated sample | Replace/regenerate column; adjust pH; improve sample preparation |
| Co-elution (overlapping peaks) | Insufficient resolution; inappropriate stationary phase | Modify gradient; change column chemistry; adjust temperature or flow rate |
| Shifting retention times | Mobile-phase inconsistency; pump instability; temperature changes | Verify solvent preparation; calibrate pump; stabilize system temperature |
| Baseline noise or drift | Detector contamination; air bubbles; degraded solvents | Degas solvents; clean detector; replace mobile phase |
| Inaccurate quantification | Poor integration; degraded standards; incorrect calibration | Reintegrate peaks; prepare fresh standards; recalibrate instrument |
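
Several of the issues in the table can be spotted numerically before they affect results. The sketch below computes three widely used system-suitability figures: the tailing factor T = W_0.05 / (2f), the resolution Rs = 2(t_R2 - t_R1) / (W_1 + W_2), and the relative standard deviation of retention times over replicate injections. The input values are hypothetical, and no pass/fail limits are encoded because acceptance criteria are set by the individual method or monograph.

```python
import statistics
from typing import Sequence


def tailing_factor(width_at_5pct: float, front_half_width: float) -> float:
    """Tailing factor T = W_0.05 / (2 * f).

    width_at_5pct:    full peak width at 5 % of peak height
    front_half_width: distance from the leading edge to the peak maximum,
                      also measured at 5 % of peak height
    A symmetrical peak gives T = 1; values above 1 indicate tailing.
    """
    if width_at_5pct <= 0 or front_half_width <= 0:
        raise ValueError("widths must be positive")
    return width_at_5pct / (2.0 * front_half_width)


def resolution(t_r1: float, t_r2: float, w1: float, w2: float) -> float:
    """Resolution Rs = 2 * (t_R2 - t_R1) / (W_1 + W_2), using baseline peak widths."""
    if w1 <= 0 or w2 <= 0:
        raise ValueError("peak widths must be positive")
    return 2.0 * (t_r2 - t_r1) / (w1 + w2)


def percent_rsd(values: Sequence[float]) -> float:
    """Relative standard deviation (sample standard deviation / mean), in percent."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)


# Hypothetical measurements (all times and widths in minutes).
T = tailing_factor(width_at_5pct=0.30, front_half_width=0.12)
Rs = resolution(t_r1=4.0, t_r2=4.6, w1=0.30, w2=0.30)
rsd = percent_rsd([4.00, 4.02, 3.98, 4.01, 3.99])  # five replicate injections

print(f"tailing factor     T   = {T:.2f}")
print(f"resolution         Rs  = {Rs:.2f}")
print(f"retention time RSD     = {rsd:.2f} %")
```

A rising tailing factor points toward the peak-shape causes in the first row, a falling resolution toward co-elution, and a growing retention-time RSD toward the mobile-phase, pump, or temperature problems listed for shifting retention times.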

Key types of chromatography used in purity testing

HPLC (high-performance liquid chromatography)

HPLC separates analytes based on their differential interactions with a stationary phase under high-pressure liquid flow.

Applications: quantitative purity assessment, impurity profiling, and routine analysis of small-molecule APIs.

GC (gas chromatography)

GC volatilizes the sample and transports it through a temperature-controlled column via an inert carrier gas.

Applications: analysis of volatile substances, detection of residual solvents, and characterization of low-molecular-weight impurities.

UHPLC and LC-MS (ultra-high-performance liquid chromatography and liquid chromatography-mass spectrometry)

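UHPLC uses higher operating pressures and columns packed with smaller particles (typically below 2 µm) than conventional HPLC, which gives sharper peaks, better resolution, and faster separations. LC-MS couples chromatographic separation to mass spectrometric detection, so each peak can be characterized by its mass as well as its retention time, making identification far more confident.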

Applications: trace-level impurity detection, structural confirmation, and high-precision analytical workflows.

Whichever technique is chosen, a typical purity test follows the same pattern: a small aliquot of the sample is dissolved in a suitable solvent and injected into the chromatographic system. The instrument then separates the constituents as they travel through the column under controlled conditions of flow, temperature, and pressure.

Conclusion

Thanks to its sensitivity, selectivity, and reproducibility, chromatography remains central to pharmaceutical quality testing. The technique can differentiate closely related molecules, quantify trace impurities, and meet rigorous pharmacopoeial requirements.

A clear understanding of how chromatographic systems operate, along with the strategies used to correct analytical errors, illustrates why this method continues to serve as the benchmark for purity verification across modern research and manufacturing environments.